SQLYoga

Learn SQL and database management at SQLYoga for articles, tutorials, and tips to improve your skills and streamline data operations. Join our community!

April 22, 2024

SQL SERVER: Seamless Migration: On-Premise Report Server to Azure SQL Managed Instance

Recently, we got request from client to migrate their SQL Srrver Reports Server. We have analyzed and review that, they are actively using Data-Driven subscriptions too. So, our major job is to have this migration smooth, which won't disturb their live environment.

Parshwa, from our team, take this challenge and handled it so quietly. Please read here about how he has achieved the same: Seamless Migration: On-Premise Report Server to Azure SQL Managed Instance

Reference : Tejas Shah ( SQLYoga.com )

18+ years of Hands-on Experience

MICROSOFT CERTIFIED PROFESSIONAL (Microsoft SQL Server)

Proficient in .NET C#

Hands on working experience on MS SQL, DBA, Performance Tuning, Power BI, SSIS, and SSRS

18+ years of Hands-on Experience

MICROSOFT CERTIFIED PROFESSIONAL (Microsoft SQL Server)

Proficient in .NET C#

Hands on working experience on MS SQL, DBA, Performance Tuning, Power BI, SSIS, and SSRS

November 17, 2017

SQL Server, T-SQL create Linked Server with Azure SQL Database

Today, we got a requirement to connect our Azure VM SQL (Report server) instance to access the Azure Database. Let me show you how create linked server for SQL Database on Azure from Azure VM SQL instance.

Please follow the mentioned steps to create a Linked Server to Azure SQL Databse:

Step 1: Open SQL Server Management Studio

Step 2: Execute script: "SP_addlinkedserver".

EXEC sp_addlinkedserver @server='AzureDB', -–Provide Linked Server Name @srvproduct='' @provider='sqlncli', @datasrc='xxxxxx.database.windows.net', –provide Azure SQl Server name @location='', @provstr='', @catalog='AzureDatabaseName' –Provide azure database name

Step 3: Execute the script to provide the SQL Server login for the above created linked server.

Exec sp_addlinkedsrvlogin ‘Azure’, ‘FALSE’, NULL, ‘’, ‘ ’;

Step 4: Verify the created linked server, under the Databases > Server Objects > Linked Servers > Your Linked Server Name

Step 5: Access the azure SQL database table. You need to use FOUR part query. [linked server name].[database name].[schema name].[table name]"

SELECT top 10 * FROM [linked server name].[database name].[schema name].[table name]

Reference : Tejas Shah http://www.SQLYoga.com

Labels:

Azure,

Linked Server,

Report Server,

SQL Yoga,

Tejas Shah

18+ years of Hands-on Experience

MICROSOFT CERTIFIED PROFESSIONAL (Microsoft SQL Server)

Proficient in .NET C#

Hands on working experience on MS SQL, DBA, Performance Tuning, Power BI, SSIS, and SSRS

18+ years of Hands-on Experience

MICROSOFT CERTIFIED PROFESSIONAL (Microsoft SQL Server)

Proficient in .NET C#

Hands on working experience on MS SQL, DBA, Performance Tuning, Power BI, SSIS, and SSRS

SQL Server Reporting Services, List out all the data sources with Report Name and Path

In Enterprise application, we can have many reports hosted. Sometimes there are few customized reports too. Over period of time, there may be many Data Sources used by the different reports. To find out Report and its data source, we can use the following query: (Execute on ReportServer Database)

SELECT C2.NAME AS Data_Source_Name , C.NAME AS ReportName , C.Path AS ReportPath FROM ReportServer.dbo.DataSource AS DS INNER JOIN ReportServer.dbo.CATALOG AS C ON DS.ItemID = C.ItemID AND DS.Link IN ( SELECT ItemID FROM ReportServer.dbo.CATALOG WHERE Type = 5 ) --Type 5 identifies data sources FULL JOIN ReportServer.dbo.CATALOG C2 ON DS.Link = C2.ItemID WHERE C2.Type = 5 ORDER BY C2.NAME ASC , C.NAME ASC;

This will gives following result:

Reference : Tejas Shah http://www.SQLYoga.com

Labels:

Data Sources,

Report Name,

Report Path,

SQLYoga,

SSRS Reports,

Tejas Shah

18+ years of Hands-on Experience

MICROSOFT CERTIFIED PROFESSIONAL (Microsoft SQL Server)

Proficient in .NET C#

Hands on working experience on MS SQL, DBA, Performance Tuning, Power BI, SSIS, and SSRS

18+ years of Hands-on Experience

MICROSOFT CERTIFIED PROFESSIONAL (Microsoft SQL Server)

Proficient in .NET C#

Hands on working experience on MS SQL, DBA, Performance Tuning, Power BI, SSIS, and SSRS

August 14, 2015

SQL Server: Use of Recursive Query with example

One of the most benefit of CTE (Common Table Expressions) is that we can create

recursive queries with them.

Recursive query is the efficient way to display hierarchy setup (Parent child relation

ship in the same table). e.g. Grand Parent, Parent, Child. This is common requirement.

To display the parent child relationship in efficient manner, we can use Recursive

CTE. Let me explain it by example.

Create Temporary Table:

DECLARE @Images TABLE ( [ResourceID] [int] NOT NULL, [ChildOf] [int] NOT NULL, [ResourceName] [varchar](250) NOT NULL )

Lets populate some data into this table:

INSERT @Images ([ResourceID], [ChildOf], [ResourceName]) VALUES (5, 0, N'Mammal') INSERT @Images ([ResourceID], [ChildOf], [ResourceName]) VALUES (6, 5, N'Carnivore') INSERT @Images ([ResourceID], [ChildOf], [ResourceName]) VALUES (14, 6, N'Cannine') INSERT @Images ([ResourceID], [ChildOf], [ResourceName]) VALUES (15, 14, N'Dog') INSERT @Images ([ResourceID], [ChildOf], [ResourceName]) VALUES (16, 15, N'Dog1') INSERT @Images ([ResourceID], [ChildOf], [ResourceName]) VALUES (17, 14, N'Monkey') INSERT @Images ([ResourceID], [ChildOf], [ResourceName]) VALUES (18, 17, N'Monkey1') INSERT @Images ([ResourceID], [ChildOf], [ResourceName]) VALUES (19, 16, N'dog1.01') INSERT @Images ([ResourceID], [ChildOf], [ResourceName]) VALUES (21, 18, N'monkey1.02') INSERT @Images ([ResourceID], [ChildOf], [ResourceName]) VALUES (23, 17, N'test') INSERT @Images ([ResourceID], [ChildOf], [ResourceName]) VALUES (25, 17, N'Monkey 2') INSERT @Images ([ResourceID], [ChildOf], [ResourceName]) VALUES (31, 0, N'Fish') INSERT @Images ([ResourceID], [ChildOf], [ResourceName]) VALUES (32, 31, N'cartilaginous fish') INSERT @Images ([ResourceID], [ChildOf], [ResourceName]) VALUES (33, 32, N'dogfish')

That is how data is stored in the table:

This is not the way that we can understand the hierarchy. Recursive query will help

us to display result like this:

This is much efficient way to list out, as we understand that how the hierarchy

is being maintained. Recursive CTE helps us to generate this kind efficient output.

Let me share the t-sql script which generates the output which we are looking for:

;WITH images

AS (

SELECT *

,ROW_NUMBER() OVER (

ORDER BY ResourceID

) AS RowID

FROM @images

)

,cte

AS (

SELECT 1 AS [Level]

,[ResourceID]

,[ResourceName]

,ChildOf

,cast(cast(RowID AS VARBINARY(4)) AS VARBINARY(max)) AS sort

FROM images

WHERE ChildOf = 0

--where [ResourceID] = 5

UNION ALL

SELECT p.[Level] + 1

,c.[ResourceID]

,c.[ResourceName]

,c.ChildOf

,cast(p.sort + cast(c.RowID AS VARBINARY(4)) AS VARBINARY(max))

FROM images c

INNER JOIN cte p ON p.[ResourceID] = c.ChildOf

--WHERE c.ChildOF=5

)

SELECT ResourceID

,ChildOf

,REPLICATE('.', ([Level] - 1) * 4) + [ResourceName] AS menu

FROM cte

ORDER BY sort

That's it. Here, I have used first CTE, to assign the Unique numbers. Then just

iterate thru each record and find their related children records. Hope this helps

you.

Reference : Tejas Shah http://www.SQLYoga.com

Labels:

CTE,

Display Hierarchy,

Recursive CTE,

SQL,

SQLYoga,

T-SQL tip,

Tejas Shah

18+ years of Hands-on Experience

MICROSOFT CERTIFIED PROFESSIONAL (Microsoft SQL Server)

Proficient in .NET C#

Hands on working experience on MS SQL, DBA, Performance Tuning, Power BI, SSIS, and SSRS

18+ years of Hands-on Experience

MICROSOFT CERTIFIED PROFESSIONAL (Microsoft SQL Server)

Proficient in .NET C#

Hands on working experience on MS SQL, DBA, Performance Tuning, Power BI, SSIS, and SSRS

June 1, 2015

SQL Server, avoid encoding special character when using FOR XML PATH

From SQL 2005, we are using FOR XML PATH('') for the string concatenation. I have also mentioned the same in very old blog: Generate Comma Separated List with SELECT statement.

Today, we face an issue when special character gets encoded and we hate to have special character in my string.

e.g: Table has records like:

1. Gynecology & Obstetrics

2. Dermatology

Expected output (after concatenating two rows): Gynecology & Obstetrics, Dermatology.

When we used FOR XML PATH, we got the output as:

Gynecology & Obstetrics,Dermatology ("&" character is encoded and it lists out "&")

This output was not the one which we were expected. To get the expected output, we need to find workaround and we finally get it as:

SELECT STUFF(((

SELECT ',' + RTRIM(CONVERT(VARCHAR(50), sm.Speciality))

FROM Table1 T2

INNER JOIN Table2 sm ON sm.ID = t2.Speciality_ID

FOR XML PATH(''),TYPE).value('.[1]', 'varchar(max)')

), 1, 1, '')

After adding "TYPE", we are able to get the expected output: Gynecology & Obstetrics, Dermatology

Reference : Tejas Shah (http://www.SQLYoga.com)

Today, we face an issue when special character gets encoded and we hate to have special character in my string.

e.g: Table has records like:

1. Gynecology & Obstetrics

2. Dermatology

Expected output (after concatenating two rows): Gynecology & Obstetrics, Dermatology.

When we used FOR XML PATH, we got the output as:

Gynecology & Obstetrics,Dermatology ("&" character is encoded and it lists out "&")

This output was not the one which we were expected. To get the expected output, we need to find workaround and we finally get it as:

SELECT STUFF(((

SELECT ',' + RTRIM(CONVERT(VARCHAR(50), sm.Speciality))

FROM Table1 T2

INNER JOIN Table2 sm ON sm.ID = t2.Speciality_ID

FOR XML PATH(''),TYPE).value('.[1]', 'varchar(max)')

), 1, 1, '')

After adding "TYPE", we are able to get the expected output: Gynecology & Obstetrics, Dermatology

Reference : Tejas Shah (http://www.SQLYoga.com)

Labels:

Comma Separated List,

Encoding,

For XML,

For XML PATH TYPE,

SQL Server,

SQL Yoga,

String Concate,

T-SQL,

Tejas Shah

18+ years of Hands-on Experience

MICROSOFT CERTIFIED PROFESSIONAL (Microsoft SQL Server)

Proficient in .NET C#

Hands on working experience on MS SQL, DBA, Performance Tuning, Power BI, SSIS, and SSRS

18+ years of Hands-on Experience

MICROSOFT CERTIFIED PROFESSIONAL (Microsoft SQL Server)

Proficient in .NET C#

Hands on working experience on MS SQL, DBA, Performance Tuning, Power BI, SSIS, and SSRS

April 4, 2015

SQL SERVER: Find Current Location of Log File(s) with File Size of All the Database

As we are mostly engaged in SQL Assignments, we come to the place where we found that there are lots of ldf files which occupies lots of the space on the DISK. To continue my work, I have to make a room on the same DISK. I found that there are lots of log files (LDF) on the disk which occupies more than 1 TB (1024 GB). This is the development server and I found and confirmed that we can have “Recovery Option” as “SIMPLE” too. The tricky part over here is, these databases are already created and Log file names are not same as database name. So, first of all I need to find out the database name to which I can make Recovery Option as Simple. Finally, I got following query which helps me to continue my assignment as follows:

SELECT d.name As DatabaseName,m.name As FileName,

m.physical_name As PhysicalFileName,

(m.size * 8.0) / 1024 / 1024 As SizeInGB

FROM sys.master_files m

INNER JOIN sys.databases d ON d.database_id = m.database_id

WHERE m.Type = 1

It will give us the list as follows:

Reference: Tejas Shah (www.SQLYoga.com)

Labels:

Current location of log files,

SQL Log file size,

SQL Server,

SQL Tips,

SQL Yoga,

Tejas Shah

18+ years of Hands-on Experience

MICROSOFT CERTIFIED PROFESSIONAL (Microsoft SQL Server)

Proficient in .NET C#

Hands on working experience on MS SQL, DBA, Performance Tuning, Power BI, SSIS, and SSRS

18+ years of Hands-on Experience

MICROSOFT CERTIFIED PROFESSIONAL (Microsoft SQL Server)

Proficient in .NET C#

Hands on working experience on MS SQL, DBA, Performance Tuning, Power BI, SSIS, and SSRS

September 4, 2014

SQL SERVER: SSIS - Rename and move files from source folder to destination folder

| With SSIS, most of the time we need to process all the files from the folder. Once file has been processed, we need to move the file to archive folder, so we should know that file has been processed and we have the file in archive folder. Here, We are going to process the files and then will move the file from source folder to archive folder by appending date and time to the filename, so we can use it for future reference. SSIS will do both of these things, Rename a file and move a file, with File System Task with operation “Rename file”. Let’s review how it works: 1. Add Variables:To configure Source folder and Archive Folder by variable

Here, we are adding Current Date time to the filename by expression as mentioned below.

3. Add For each Loop Container and set properties :Now, lets loop thru the folder and process each file from the folder by “For Each loop”. Here, we can setup the folder by Expressions->Directory use Expression @[User::SourceFolder]. We should also specify which type of files we are going to process, like “txt”, “csv” etc..by specifying the same in “Files”. We are fetching the file name with extension so that option needs to be selected as displayed in the following screenshot.  We need to assign each file name to the variable, by Variable Mappings->set variable [User::FileName] and Index as 0  4. Add File System Task:Add “File System Task” inside “For each Loop Container”  5. Set properties for File System Task:This is the place where we need to setup the operation, which will do our job. A. Configure SourceVariable B. Configure DestinationVariable C. Select operation: “Rename File”, which will rename the file and move it to the Archive Folder as we have specified in variable.  6. Run package and check  With SSIS, it is much simple to process multiple files as mentioned above. Reference: Tejas Shah (www.SQLYoga.com) |

Labels:

File System Task,

for each loop,

Move Files,

Process all files with SSIS,

Rename Files,

SQLYoga,

SSIS,

Tejas Shah

18+ years of Hands-on Experience

MICROSOFT CERTIFIED PROFESSIONAL (Microsoft SQL Server)

Proficient in .NET C#

Hands on working experience on MS SQL, DBA, Performance Tuning, Power BI, SSIS, and SSRS

18+ years of Hands-on Experience

MICROSOFT CERTIFIED PROFESSIONAL (Microsoft SQL Server)

Proficient in .NET C#

Hands on working experience on MS SQL, DBA, Performance Tuning, Power BI, SSIS, and SSRS

September 1, 2014

SQL SERVER: SSIS - Conditional Split Data Flow Transformation Task

| Conditional Split transform use split the source row in easy to multiple groups in data flow and which Destination table populated. Lets review the same by reading a Sample text file and separate out the data in two groups. 1. Create sample text file: This text file is piped delimited and last row in text Total Row count

2. Create sample table: Create sample destination table in Test database 1: CREATE TABLE [dbo].[Employee](2: [EmployeeId] [int] IDENTITY(1,1) NOT NULL,3: [EmployeeNumber] [int] NULL,4: [EmployeeName] [varchar](50) NULL,5: CONSTRAINT [PK_Employee] PRIMARY KEY CLUSTERED6: (7: [EmployeeId] ASC8: )9: )10: GO 3. Add Data Flow Task: Add data flow task in Package for transfer data source text file “Employee.txt” to sql server table “dbo.Employee”  4. Add new Flat File Connection: Right click on Connection Manager and add new Flat File connection  5. Set Flat file source properties: Flat file source properties set Connection manager Name, File Name (Source full filepath), column names in the first data row as a true  6. Add Flat file source: Add “Flat File Source” in “Data flow Task” and set properties Flat file connection manager and retain null values from the source as null values in the data flow as true and click button on preview and see in this file extra row include in text file  7. Add Conditional Split Transformation in Data Flow Task: Add Conditional Split Transformation in data flow task for split row  8. Set Condition Split Properties: Set properties Condition split where EmployeeNumber equal to “Total Row count” then this rows not use and other row use to process.  9. Add new OLE DB Connection: Right click on Connection Managers and add new OLE DB Connection  10. Set OLE DB Connection properties OLE DB Connection properties set Server Name, Connection to a database and click on ok button  11. Add OLE DB Destination: Add “OLE DB Destination” in “Data flow Task” and set properties OLE DB Connection Manager and Name of the table or the view  12. Set Input output selection : Set Input output selection between Condition spit and OLE DB Destination   13. Run package and Check :   In this way we get split the information and use as per the requirement, Reference: Tejas Shah (www.SQLYoga.com) |

Labels:

Conditional Split Task,

Data Flow Tranformation,

Read File,

Row Sampling,

SQL Tips,

SQLYoga,

SSIS,

SSIS Tasks,

Tejas Shah

18+ years of Hands-on Experience

MICROSOFT CERTIFIED PROFESSIONAL (Microsoft SQL Server)

Proficient in .NET C#

Hands on working experience on MS SQL, DBA, Performance Tuning, Power BI, SSIS, and SSRS

18+ years of Hands-on Experience

MICROSOFT CERTIFIED PROFESSIONAL (Microsoft SQL Server)

Proficient in .NET C#

Hands on working experience on MS SQL, DBA, Performance Tuning, Power BI, SSIS, and SSRS

August 6, 2014

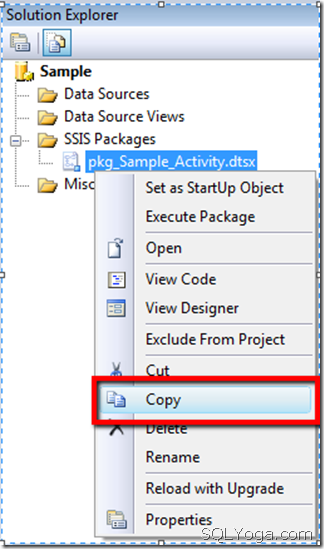

SQL SERVER: Clone SSIS Package

Labels:

Copy SSIS Package,

SQL Server,

SQL Tips,

SQLYoga,

SSIS,

Tejas Shah

18+ years of Hands-on Experience

MICROSOFT CERTIFIED PROFESSIONAL (Microsoft SQL Server)

Proficient in .NET C#

Hands on working experience on MS SQL, DBA, Performance Tuning, Power BI, SSIS, and SSRS

18+ years of Hands-on Experience

MICROSOFT CERTIFIED PROFESSIONAL (Microsoft SQL Server)

Proficient in .NET C#

Hands on working experience on MS SQL, DBA, Performance Tuning, Power BI, SSIS, and SSRS

June 14, 2014

SQL Yoga : Certificate Expired, Mirroring Stopped

Database Administrators might face this issue while certificate gets expired and database mirroring (Non domain database mirroring) gets disconnected as two servers, Primary and Secondary cannot communicate with each other. The error message can be found in Log as follows:

In this post, We are going to review step by step process to resolve this issue by providing renew parameters with certificate configurations. 1. Create a new certificate with longer endpoint (on Principal): |

1: -- Create a new certificate for the endpoint2: USE master;3: CREATE CERTIFICATE [Principal_Certificate_New]4: WITH SUBJECT = 'Principal Certificate',5: START_DATE='01/01/2014', -- Provide date prior to current date6: EXPIRY_DATE='12/31/2020'; -- Provide this as future date7: GO

2. Take backup of the newly created certificate (on Principal):

1: USE master;2: BACKUP CERTIFICATE [Principal_Certificate_New] TO FILE = N'F:\Backup\Principal_Certificate_New.cer';3: GO

3. Set mirroring to use the newly created certificate (On Principal):

1: ALTER ENDPOINT DBMirrorEndPoint2: FOR DATABASE_MIRRORING (AUTHENTICATION = CERTIFICATE [Principal_Certificate_New])3: GO

4. Delete the old certificate for endpoint (on Principal):

1: USE master;2: DROP CERTIFICATE [Principal_Certificate_Old]3: GO

5. Drop the Old Certificate for Principal Login (on Mirror):

1: USE master;2: DROP CERTIFICATE [Principal_Certificate_Old]3: GO

6. Create a new certificate from the principal backup file (on Mirror):

1: USE master;2: CREATE CERTIFICATE [Principal_Certificate_New] AUTHORIZATION PrincipalServerUser3: FROM FILE = N'F:\Backup\Principal_Certificate_New.cer';4: GO

7. Create a new certificate with longer endpoint (on Mirror):

1: use master;2: CREATE CERTIFICATE Mirror_Certificate_New3: WITH SUBJECT = 'Mirror Certificate New'4: ,EXPIRY_DATE = '12/31/2020' -- Provide this as future date5: GO

8. Take backup of newly created certificate (on Mirror):

1: USE master;2: BACKUP CERTIFICATE [mirror_new_cert] TO FILE = 'F:\Backup\Mirror_Certificate_New.cer';3: GO

9. Set mirroring for newly created certificate (on Mirror):

1: USE master;2: ALTER ENDPOINT DBMirrorEndPoint3: FOR DATABASE_MIRRORING (AUTHENTICATION = CERTIFICATE [Mirror_Certificate_New])4: GO

10. Drop the old certificate for endpoint (On Mirror):

1: USE master;2: DROP CERTIFICATE [Mirror_Certificate_Old]3: GO

11. Drop the old certificate for Mirror login (On Principal):

1: USE master;2: DROP CERTIFICATE [Mirror_Certificate_Old]3: GO

12. Create a new certificate from the mirror backup file (On Principal):

1: USE master;2: CREATE CERTIFICATE [Mirror_Certificate_New] AUTHORIZATION MirrorServerUser3: FROM FILE = 'F:\Backup\Mirror_Certificate_New.cer'4: GO

13. Resume the mirroring session for each database(On Principal and Mirror):

1: USE master;2: ALTER DATABASE [Mirrored_Database_Name] SET PARTNER RESUME3: GO

By following the mentioned steps, we can resolve the certificate issues for Mirroring database and Mirroring will be resumed.

Note : 1. Always prefer to provide the endpoint date as far date, so that issue doesn’t occur very soon. 2. During this process, Primary database is available without any interruption.

Reference: Tejas Shah (www.SQLYoga.com)

Labels:

Certificates,

Database Mirroring,

DBA,

SQL Server,

SQL Yoga

18+ years of Hands-on Experience

MICROSOFT CERTIFIED PROFESSIONAL (Microsoft SQL Server)

Proficient in .NET C#

Hands on working experience on MS SQL, DBA, Performance Tuning, Power BI, SSIS, and SSRS

18+ years of Hands-on Experience

MICROSOFT CERTIFIED PROFESSIONAL (Microsoft SQL Server)

Proficient in .NET C#

Hands on working experience on MS SQL, DBA, Performance Tuning, Power BI, SSIS, and SSRS

Subscribe to:

Comments (Atom)